rqlite is an open-source distributed relational database, which uses SQLite as its storage engine. rqlite is written in Go and uses Raft to achieve consensus across a set of SQLite databases. It gracefully handles leader election, and can tolerate machine failure.

rqlite is an open-source distributed relational database, which uses SQLite as its storage engine. rqlite is written in Go and uses Raft to achieve consensus across a set of SQLite databases. It gracefully handles leader election, and can tolerate machine failure.

With the v3 release series, rqlite can now replicate SQLite databases on a global scale, with very little effort. Let’s see it in action using the AWS EC2 cloud.

Let’s build our global infrastructure

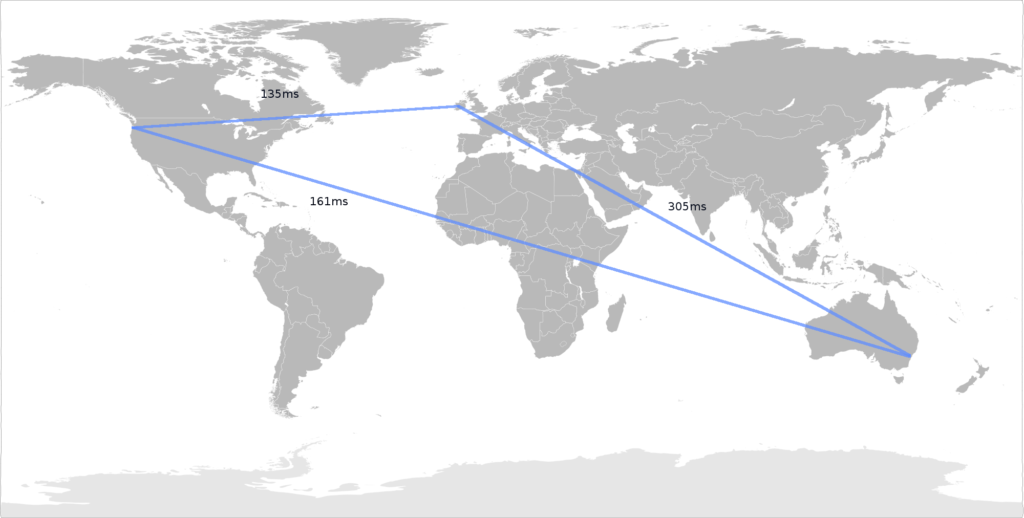

The EC2 system makes it simple to fire up a global presence, so I did just that. I launched 3 m4.xlarge instances, running Ubuntu 14.04 LTS — one in the USA (Oregon), one in Europe (Dublin), and, finally, one in Australia (Sydney).

I ended up with the following hosts:

| AWS Region | Public Hostname | Public IP | Private IP |

|---|---|---|---|

| USA (Oregon) | ec2-52-36-0-40.us-west-2.compute.amazonaws.com | 52.36.0.40 | 172.31.40.213 |

| Europe (Dublin) | None assigned | 52.51.50.174 | 172.31.32.210 |

| Australia (Sydney) | ec2-52-64-65-8.ap-southeast-2.compute.amazonaws.com | 52.36.0.40 | 172.31.6.75 |

For the purposes of this experiment, I made the Security Groups wide open, so there would be no problems with network access. Of course, you wouldn’t do this in a production environment.

Check the network

To get an idea of network latency, I ran some ping tests.

From Oregon to Dublin

$ ping 52.51.50.174 PING 52.51.50.174 (52.51.50.174) 56(84) bytes of data. 64 bytes from 52.51.50.174: icmp_seq=1 ttl=48 time=135 ms

From Oregon to Sydney

$ ping 52.64.65.8 PING 52.64.65.8 (52.64.65.8) 56(84) bytes of data. 64 bytes from 52.64.65.8: icmp_seq=1 ttl=51 time=161 ms

From Sydney to Dublin

Next, after ssh’ing in to each node, let’s install rqlite v3.1.0 on each node like so:

$ bash < <(curl https://raw.githubusercontent.com/otoolep/global-rqlite/master/install.sh)

The source of this script is available here.

Start the leader

Let’s choose to fire up the leader in Oregon, using the EC2 metadata system to retrieve the IP addresses. So I ran the following commands on the EC2 instance in Oregon.

$ PUBIP=`curl -s http://169.254.169.254/latest/meta-$ data/public-ipv4` $ LOCIP=`curl -s http://169.254.169.254/latest/meta-data/local-ipv4` $ ./rqlited -http $LOCAL_IP:4001 -httpadv $PUBIP:4001 -raft $LOCIP:4002 -raftadv $PUBIP:4002 $HOME/node

We now have a single rqlite node, with a real SQLite database underneath it. v3.1.0 includes the important options httpadv and raftadv, which allows the node to advertise an IP address other than the address it is bound to — in this case it advertises the public IP address of the EC2 instance, allowing other nodes to contact it from outside the AWS US Oregon region.

This node will accept commands, so let’s create a table and insert a record, using the rqlite CLI.

$ ./rqlite -H ec2-52-36-0-40.us-west-2.compute.amazonaws.com

rqlite> CREATE TABLE foo (id INTEGER NOT NULL PRIMARY KEY, name TEXT)

0 row affected (0.000336 sec)

rqlite> INSERT INTO foo(name) VALUES("fiona")

1 row affected (0.000074 sec)

rqlite> SELECT * FROM foo

+----+-------+

| id | name |

+----+-------+

| 1 | fiona |

+----+-------+

The rqlite CLI provides a very similar experience to the SQLite CLI. But for full functionality (such as bulk inserts and transaction control), use the HTTP API directly.

Create a cluster

There isn’t much point to a single-node cluster, so let’s add two more nodes. To do that I run the next commands on each of the two remaining EC2 instances:

$ PUBIP=`curl -s http://169.254.169.254/latest/meta-data/public-ipv4` $ LOCIP=`curl -s http://169.254.169.254/latest/meta-data/local-ipv4` $ ./rqlited -http $LOCIP:4001 -httpadv $PUBIP:4001 -raft $LOCIP:4002 -raftadv $PUBIP:4002 -join http://ec2-52-36-0-40.us-west-2.compute.amazonaws.com:4001 $HOME/node

Global replication!

With these simple steps, we’ve now got a globally replicated relational database. The new table and row have been replicated to the two new nodes. And, of course, every future change made on the leader SQLite database will be replicated synchronously to the other 2 nodes, placed out of harms way, thousands of miles from the leader.

Fault tolerance

This 3-node cluster can tolerate the failure of a node, and if that node is the leader, a new leader will be elected within a couple of seconds. If the cluster was 5 nodes in size, it could tolerate the failure of two nodes.

rqlite exposes a HTTP API, which the CLI uses. This API will also redirect clients to the leader node, if the node contacted is not the leader. You can connect the CLI to any node, and it will transparently redirect to the leader automatically, if necessary.

Good post and good project. I’m wondering, what other projects would allow 4 different websites with very high latency to use the same DB ? (not specifically sqlite)

Hi Jean — I am not specifically aware of any such projects.

Were the private IPs of the EC2 instances in Dublin and Oregon really the same or was that a typo?

Actually, it looks like a copy ‘n’ paste error — thanks. I’ll change one of the addresses so it’s clearer (though it wouldn’t matter if they were the same, with the networking setup shown above would still work fine).