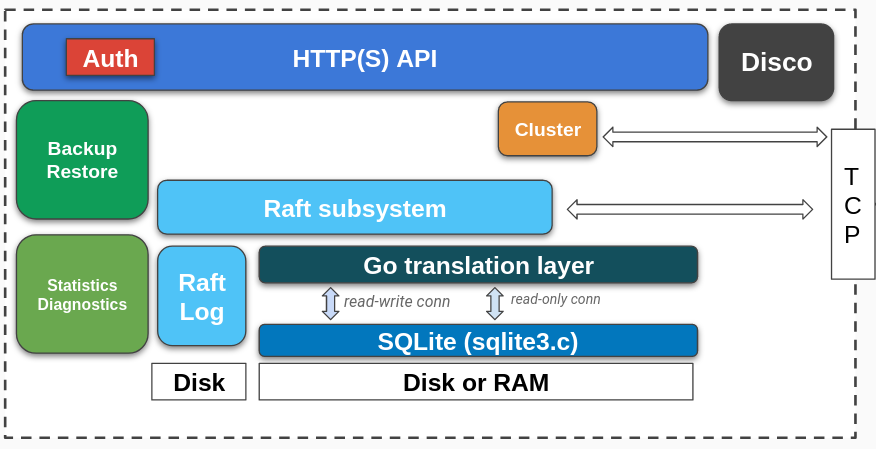

rqlite is an open-source, lightweight, distributed relational database, which uses SQLite as its storage engine. Written in Go, it’s trivial to deploy and easy to use.


Release 7.5.0 adds Queued Writes. This is the first time rqlite allows users to trade off durability for much higher write performance.

Why offer Queued Writes?

rqlite has been in development for about 8 years, and this is the first time it’s offering new write semantics. Up until this point rqlite was very opinionated — once a write request was acknowledged, rqlite guaranteed that your data was persisted in the Raft log, allowing you to be sure the data was replicated to the underlying SQLite database. This meant you could lose a node in your cluster, and remain sure your data was safe.

With Queued Writes, rqlite itself can now queue a set of received write requests, internally batch them, and then write that batch to the Raft log as a single log entry. This is key: by putting more data in a single Raft log entry, all of those previously distinct requests now result in a single Raft transaction, reducing the number of network round trips to a minimum. The result is a very large increase in write throughput, due to fewer network hops and fsyncs. But it does mean there is a small risk of data loss if a node crashes after it queues the data, but before that data has been persisted to the Raft log.

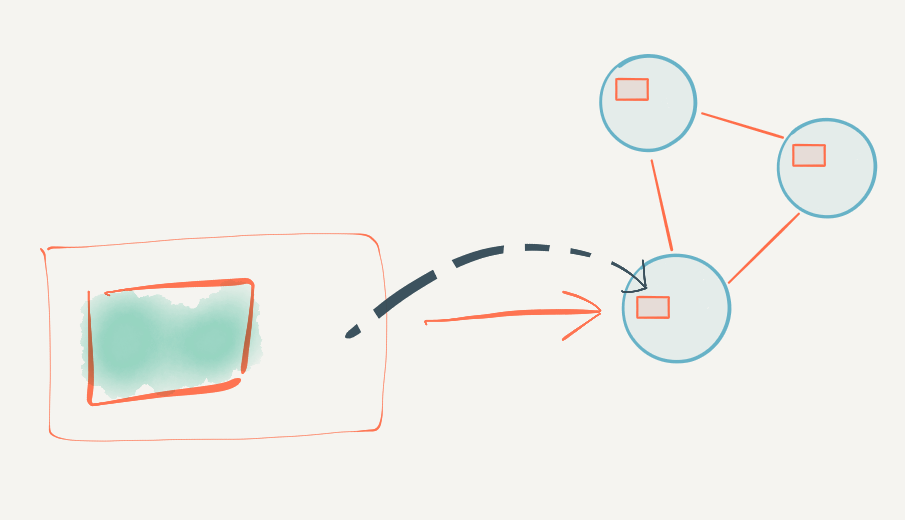


But taking all this into account, I decided it could still be done without any real conflict with the mission of rqlite.

A good use case

Why add Queued Writes? To start, I was presented with a good use case. My concern had always been that clients wouldn’t be able to properly track whether data was lost when a node fails. While it should be very rare, in the real world it will happen. But the use case I was presented with convinced me it didn’t matter, because updates would be happening constantly, and even if some row updates are lost, more updates to those same rows will arrive soon anyway. As a result, it won’t matter if some previous updates were lost, which again should be very rare, if it happens at all.

But this same use case required much higher write-performance, over higher-latency network links. So adding an internal write queue was a worthwhile trade-off, unblocking rqlite for this application.

Clear separation in design and implementation

It’s very important that any new features don’t make the existing feature set harder to use, make rqlite confusing, or affect the robustness of the existing code.

It turned out that this was relatively easy to do. There has always been a clear separation within rqlite between the API, the Raft consensus system, and the storage layer, and this helped with the implementation. A new flag was added to the API, so existing users are unaffected by this change: users must explicitly enable Queued Writes. The code added specifically to support Queued Writes is almost entirely separate from the code paths for non-Queued Writes, up until the point the data is sent to the Raft consensus system. From that point on, the code didn’t have to change at all.
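To make the opt-in concrete, here is a minimal sketch of how a Go client might build such a request. It assumes the flag is the `queue` query parameter on the `/db/execute` endpoint and that a node listens on `localhost:4001`; the helper `buildExecuteRequest` and the table `foo` are hypothetical names used only for illustration, so check the rqlite documentation for your version before relying on it.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/url"
)

// buildExecuteRequest (hypothetical helper) builds a POST request that
// carries stmts as a JSON array. When queued is true it adds the "queue"
// query parameter, which is assumed here to be the opt-in flag.
func buildExecuteRequest(baseURL string, stmts []string, queued bool) (*http.Request, error) {
	u, err := url.Parse(baseURL)
	if err != nil {
		return nil, err
	}
	u.Path = "/db/execute"
	if queued {
		u.RawQuery = "queue"
	}

	body, err := json.Marshal(stmts)
	if err != nil {
		return nil, err
	}

	req, err := http.NewRequest(http.MethodPost, u.String(), bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

func main() {
	// The address and the table name are assumptions for this example.
	stmts := []string{"INSERT INTO foo(name) VALUES('fiona')"}

	req, err := buildExecuteRequest("http://localhost:4001", stmts, true)
	if err != nil {
		panic(err)
	}
	// The request is only printed, not sent, so this runs without a cluster.
	fmt.Println(req.Method, req.URL.String())
}
```

Passing `false` for `queued` leaves the URL untouched, which mirrors the point above: a client that does not ask for Queued Writes keeps the existing write semantics.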

The concept was already in place

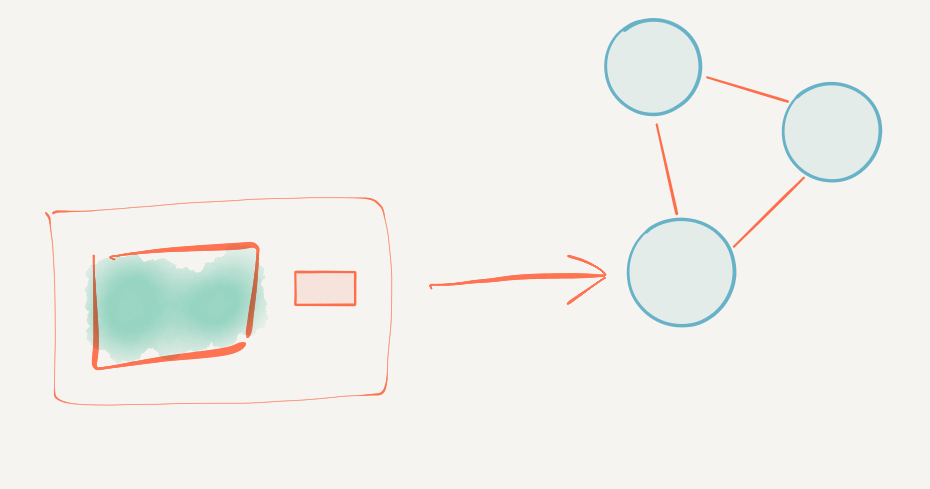

Finally, in a sense, the Queued Writes concept was already present within rqlite. From early on rqlite has supported a Bulk Update API. That API meant that clients could batch up write-requests on their side, before sending them to rqlite as a single HTTP request. This request would then be encapsulated in a single Raft log entry.

So in a sense this batching approach is simply copied from the client code to the database, allowing a client to choose where batching should take place.

Of course, it’s not exactly the same as when the queue is in the client’s code. The client does have less control, and a little less visibility into the state of each request, but for many use cases, the trade-off is the right thing to do.
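For comparison, client-side batching can be sketched in a few lines of Go. This is only an illustration, assuming each batch is serialized as a JSON array of SQL statements and sent as one HTTP request; the `chunk` helper, the batch size, and the table `foo` are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// chunk splits stmts into consecutive batches of at most size statements.
// Each batch would become the body of one bulk request.
func chunk(stmts []string, size int) [][]string {
	if size < 1 {
		size = 1
	}
	var batches [][]string
	for start := 0; start < len(stmts); start += size {
		end := start + size
		if end > len(stmts) {
			end = len(stmts)
		}
		batches = append(batches, stmts[start:end])
	}
	return batches
}

func main() {
	stmts := []string{
		"INSERT INTO foo(name) VALUES('a')",
		"INSERT INTO foo(name) VALUES('b')",
		"INSERT INTO foo(name) VALUES('c')",
	}
	// With a batch size of 2, three statements become two request bodies.
	for _, batch := range chunk(stmts, 2) {
		body, err := json.Marshal(batch)
		if err != nil {
			panic(err)
		}
		fmt.Println(string(body))
	}
}
```

Here the client decides when a batch is complete and knows exactly which statements travelled together, which is the control and visibility that is partly given up when the queue lives inside the database.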

Go implementation

For those of you interested in the implementation, it makes fairly conventional use of Go channels. You can find the Queue implementation here.

Results

Write performance

To test the performance of batching, I deployed a 3-node cluster across 3 Google Cloud Platform zones. Previous testing shows this to be the sweet spot of reliability and throughput. See my presentation to the Carnegie Mellon Database Group for more details on that testing.

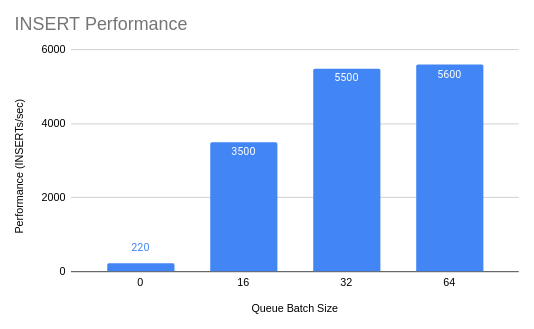

A simple performance test — not using Queued Writes — shows an INSERT rate of about 220 INSERTs per second. This testing uses rqbench, sending writes to the Leader. After switching to Queued Writes, performance shows a 15x improvement.

Increasing the batch size from 16 (the default) to 32 resulted in further performance increases, but after that the improvement was marginal — indicating that disk performance on each node became the bottleneck.
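A rough back-of-the-envelope model helps put these numbers in context. It is a simplification, not a measurement: it assumes each Raft log entry costs a fixed time $T$ no matter how many statements it carries, and that the 15x figure was obtained at the default batch size of 16. Writing $R(B)$ for the throughput at batch size $B$:

$$
T \approx \frac{1}{220\ \text{s}^{-1}} \approx 4.5\ \text{ms},
\qquad
R(B) \approx \frac{B}{T},
\qquad
R(16) \approx 16 \times 220 \approx 3520\ \text{INSERTs/s}
$$

Under those assumptions the ideal gain at a batch size of 16 would be 16x, and the observed 15x (roughly $15 \times 220 = 3300$ INSERTs per second) is close to it. The model ignores per-statement costs such as disk writes, which is consistent with the gains flattening out beyond a batch size of 32.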

Of course, these results are not that surprising: it’s common practice to batch up data to overcome network-related inefficiencies. But it’s still remarkable how much performance increases when durability guarantees are relaxed ever so slightly.

Load testing

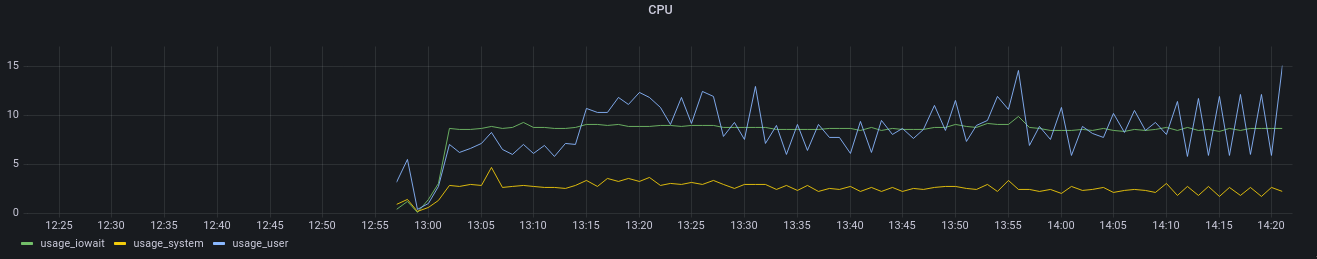

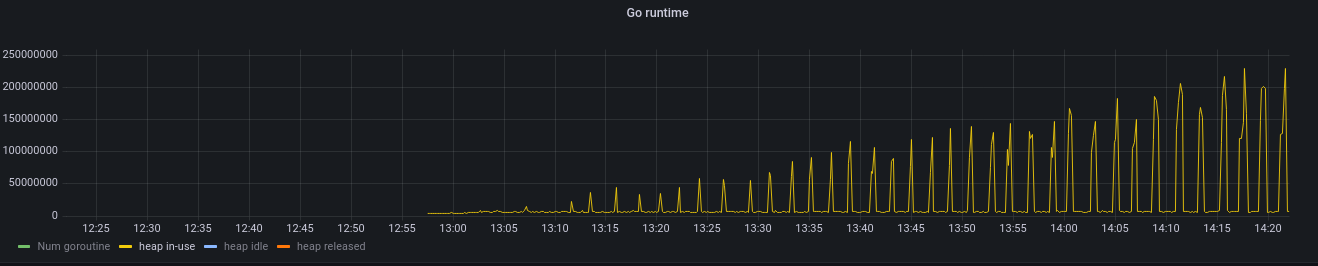

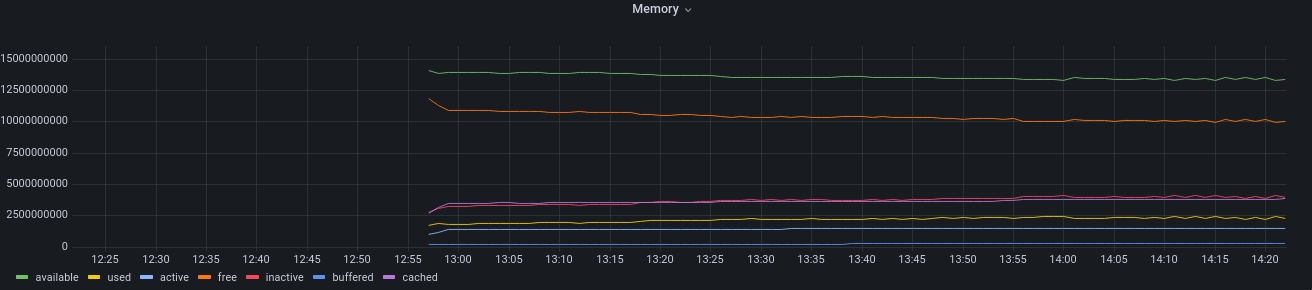

It’s important after a new feature is released to check that performance under load hasn’t regressed. A 2-hour load test shows that CPU, memory, disk, and the Go runtime all look fine. During this test, rqbench made Queued Write requests as fast as it could.

So check out release 7.5.0, and try out Queued Writes on your high-latency network.