Did you know that SQLite contains a full text search engine? The SQLite FTS4 and FTS5 Extensions allow you to perform full-text searches on documents loaded into SQLite.

Did you know that SQLite contains a full text search engine? The SQLite FTS4 and FTS5 Extensions allow you to perform full-text searches on documents loaded into SQLite.

And since rqlite uses SQLite as its database engine, rqlite makes it really easy to deploy a highly-available search engine – and one you can interact with using SQL and HTTP.

What is rqlite?

rqlite is a lightweight, open-source distributed database that uses SQLite as its storage engine. Written in Go, it’s designed for easy setup, allowing you to create a cluster in seconds. Once clustered, rqlite can handle the failure of individual nodes without losing access to data, ensuring your system remains reliable and available when needed.

And because rqlite is built on SQLite, once you form a rqlite cluster, it’s means you’ve got a highly-available search engine too.

Let’s build a search engine

Let’s spin up a 3-node rqlite cluster — step-by-step directions for deploying this test cluster are available on GitHub.

Let’s spin up a 3-node rqlite cluster — step-by-step directions for deploying this test cluster are available on GitHub.

The step-by-step process is deliberately manual so you can see the details, but rqlite has extensive support for automatic clustering and discovery when running on Kubernetes, as well as with Consul, and etcd. You can also run rqlite using Docker.

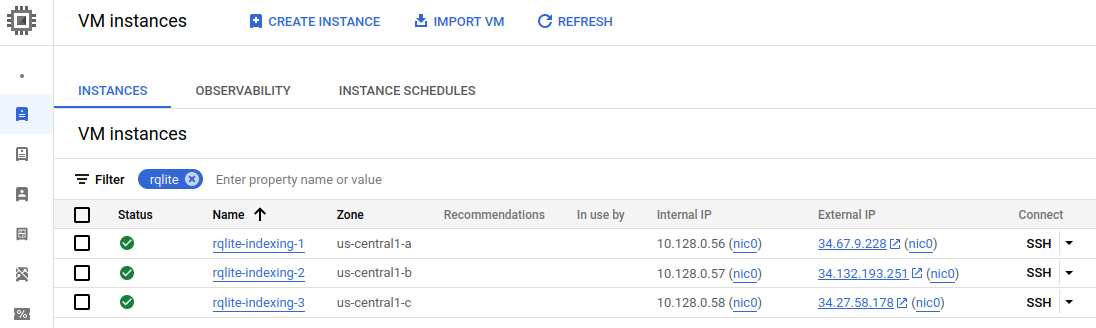

For this test, I deployed three virtual machines on Google Cloud Platform, each an e2-standard-4 type with persistent SSD storage. I placed each machine in a different zone to maximize fault tolerance, ensuring that the loss of one data center wouldn’t bring down the entire cluster.

Let’s index some Apache logs

Logs are a common use case for search engines. In this test, I used an Apache Access log file containing 5 million records. I wrote a simple Python program to read these logs and write them to rqlite.

Logs are a common use case for search engines. In this test, I used an Apache Access log file containing 5 million records. I wrote a simple Python program to read these logs and write them to rqlite.

What does the indexing program do? Not that much, which is part of its charm. It creates a virtual FTS4 table in rqlite (FTS5 would work well too), reads the Apache log file, and writes each line to rqlite. SQLite then does the indexing.

Increasing indexing performance

To increase indexing speed, I used rqlite’s Queued Writes, feature. It boosts write performance significantly, allowing the system to index logs much faster, but with minor trade-offs in durability. I also ran two indexing programs concurrently — with one instance writing the first half of the log file, and the second writing the other half.

I achieved indexing rates greater than 3,000 log lines per second, indexing 5 million logs in under 30 minutes. This kind of performance would be more than enough for many moderately-popular websites.

And now let’s search it

First let’s perform some initial examination of our data using the rqlite shell:

$ rqlite -H 34.67.9.228 Welcome to the rqlite CLI. Enter ".help" for usage hints. Connected to http://34.67.9.228:4001 running version v8.23.3 34.67.9.228:4001> .schema +-----------------------------------------------------------------------+ | sql | +-----------------------------------------------------------------------+ | CREATE VIRTUAL TABLE logs USING fts5(entry) | +-----------------------------------------------------------------------+ | CREATE TABLE 'logs_data'(id INTEGER PRIMARY KEY, block BLOB) | +-----------------------------------------------------------------------+ | CREATE TABLE 'logs_idx'(segid, term, pgno, PRIMARY KEY(segid, term)) | +-----------------------------------------------------------------------+ | CREATE TABLE 'logs_content'(id INTEGER PRIMARY KEY, c0) | +-----------------------------------------------------------------------+ | CREATE TABLE 'logs_docsize'(id INTEGER PRIMARY KEY, sz BLOB) | +-----------------------------------------------------------------------+ | CREATE TABLE 'logs_config'(k PRIMARY KEY, v) WITHOUT ROWID | +-----------------------------------------------------------------------+ 34.67.9.228:4001>

As you can see, when an FTS table is created, SQLite actually creates additional other tables.

Directly accessing the rqlite HTTP API is also easy. Let’s confirm we have the right number of logs, by querying a node in the cluster:

$ curl -G 'localhost:4001/db/query?pretty&timings' --data-urlencode 'q=SELECT COUNT(*) FROM logs'

{

"results": [

{

"columns": [

"COUNT(*)"

],

"types": [

"integer"

],

"values": [

[

5000000

]

],

"time": 7.038763355

}

],

"time": 7.041182592

}

rqlite took about 7 seconds to return the count — and there are five million records as expected.

How fast is search?

It’s fast. Very fast.

Before I kicked off the indexing processes I actually modified a single line at random in the Apache log file. I changed that line such that it contained the string “SuperSecretAgent”. Let’s see how long it takes for rqlite to locate that line.

We will do this search in two ways — firstly using LIKE, which doesn’t use full-text search, and then using MATCH, which does. We will go back to using the rqlite shell, and enable timings too.

$ rqlite -H 34.67.9.228 Welcome to the rqlite CLI. Enter ".help" for usage hints. Connected to http://127.0.0.1:4001 running version v8.23.3 34.67.9.228:4001> .timer on 34.67.9.228:4001> SELECT * FROM logs WHERE entry LIKE '%SuperSecretAgent%' 47.39.156.135 - - [01/Apr/2022:16:48:22 +0200] "HEAD /libraries/addurl.php HTTP/1.1" 404 0 "-" "SuperSecretAgent (http://www.owasp.org/index.php/Category:OWASP_DirBuster_Project)" "-" Run Time: 2.458101 seconds 34.67.9.228:4001>

I executed this query a few times, and it usually took around two second to return.

Now, let’s do a search.

$ rqlite -H 34.67.9.228 34.67.9.228:4001> SELECT * FROM logs WHERE entry MATCH 'SuperSecretAgent' 47.39.156.135 - - [01/Apr/2022:16:48:22 +0200] "HEAD /libraries/addurl.php HTTP/1.1" 404 0 "-" "SuperSecretAgent (http://www.owasp.org/index.php/Category:OWASP_DirBuster_Project)" "-" Run Time: 0.000214 seconds 34.67.9.228:4001>

That’s a big difference in speed – about 10,000 times faster! Of course, that’s the point of search, but it’s compelling demonstration of how much faster search can be relative to doing a full scan of the table.

Next Steps

You could test rqlite’s resilience by taking one of the nodes offline; the cluster will still operate smoothly, continuing to index data and respond to search queries. Once the offline node is back online, it will automatically rejoin the cluster without any need for manual intervention.

Additionally, you can improve how you model data. For example, using a JSON schema for the log data means you could convert the Apache log lines into JSON documents on the fly before indexing. This change would let you run more detailed queries such as sorting search results by timestamp, and performing basic analytics on the log data.

Get Started

To see what rqlite can do, download it and join the Slack channel for more discussions, support, and to collaborate with others.